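Abstract:

Steps for using openai-gpt in the MindSpore AI framework.

I did not get this to run all the way through, and the cause is still unresolved.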

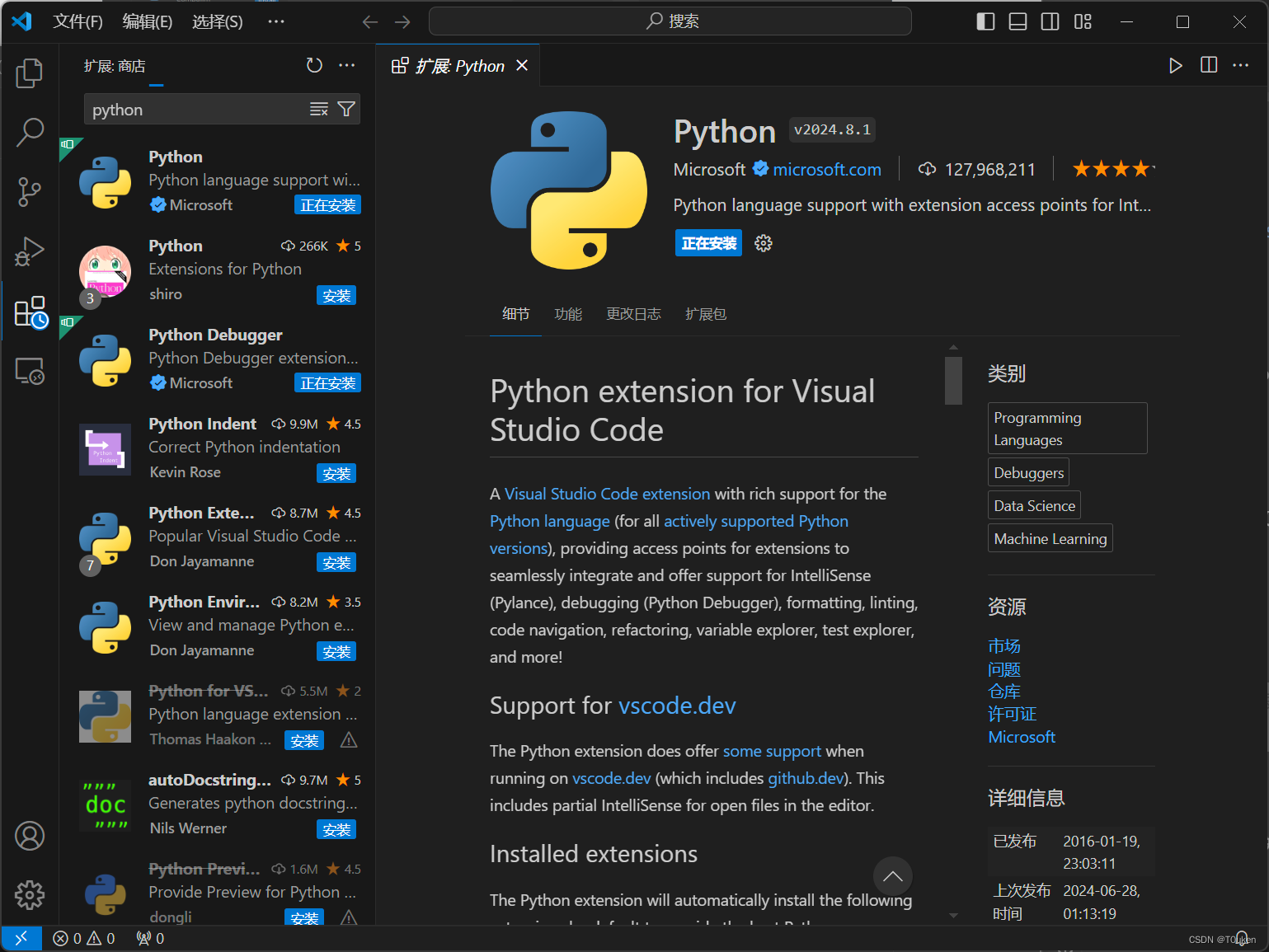

1. Environment setup
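The setup cells below use the Jupyter magic `%env HF_ENDPOINT=https://hf-mirror.com` to send model and dataset downloads to a Hugging Face mirror. Outside Jupyter, a minimal equivalent is to set the same environment variable from Python before importing mindnlp. This sketch assumes mindnlp reads `HF_ENDPOINT` at import or download time (the download logs later in this post do show hf-mirror.com being used). Setting it before the import covers both cases.

```python
import os

# Point Hugging Face downloads at the mirror used in this notebook.
# Set it before importing mindnlp, in case the value is read once at import time.
os.environ["HF_ENDPOINT"] = "https://hf-mirror.com"

# import mindnlp  # import only after the variable is set
print(os.environ["HF_ENDPOINT"])
```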

```python
%%capture captured_output
# The lab environment has mindspore==2.2.14 preinstalled; to use a different version, change the mindspore version number below
!pip uninstall mindspore -y
!pip install -i https://pypi.mirrors.ustc.edu.cn/simple mindspore==2.2.14
```

```python
# This example was adapted for mindnlp 0.3.1; if it fails to run, pin that version with `!pip install mindnlp==0.3.1`
!pip install mindnlp==0.3.1
!pip install jieba
%env HF_ENDPOINT=https://hf-mirror.com
```

Output:

Looking in indexes: https://pypi.tuna.tsinghua.edu.cn/simple

Requirement already satisfied: mindnlp==0.3.1 in /home/nginx/miniconda/envs/jupyter/lib/python3.9/site-packages (0.3.1)

Requirement already satisfied: mindspore in /home/nginx/miniconda/envs/jupyter/lib/python3.9/site-packages (from mindnlp==0.3.1) (2.2.14)

Requirement already satisfied: tqdm in /home/nginx/miniconda/envs/jupyter/lib/python3.9/site-packages (from mindnlp==0.3.1) (4.66.4)

Requirement already satisfied: requests in /home/nginx/miniconda/envs/jupyter/lib/python3.9/site-packages (from mindnlp==0.3.1) (2.32.3)

Requirement already satisfied: datasets in /home/nginx/miniconda/envs/jupyter/lib/python3.9/site-packages (from mindnlp==0.3.1) (2.20.0)

Requirement already satisfied: evaluate in /home/nginx/miniconda/envs/jupyter/lib/python3.9/site-packages (from mindnlp==0.3.1) (0.4.2)

Requirement already satisfied: tokenizers in /home/nginx/miniconda/envs/jupyter/lib/python3.9/site-packages (from mindnlp==0.3.1) (0.19.1)

Requirement already satisfied: safetensors in /home/nginx/miniconda/envs/jupyter/lib/python3.9/site-packages (from mindnlp==0.3.1) (0.4.3)

Requirement already satisfied: sentencepiece in /home/nginx/miniconda/envs/jupyter/lib/python3.9/site-packages (from mindnlp==0.3.1) (0.2.0)

Requirement already satisfied: regex in /home/nginx/miniconda/envs/jupyter/lib/python3.9/site-packages (from mindnlp==0.3.1) (2024.5.15)

Requirement already satisfied: addict in /home/nginx/miniconda/envs/jupyter/lib/python3.9/site-packages (from mindnlp==0.3.1) (2.4.0)

Requirement already satisfied: ml-dtypes in /home/nginx/miniconda/envs/jupyter/lib/python3.9/site-packages (from mindnlp==0.3.1) (0.4.0)

Requirement already satisfied: pyctcdecode in /home/nginx/miniconda/envs/jupyter/lib/python3.9/site-packages (from mindnlp==0.3.1) (0.5.0)

Requirement already satisfied: jieba in /home/nginx/miniconda/envs/jupyter/lib/python3.9/site-packages (from mindnlp==0.3.1) (0.42.1)

Requirement already satisfied: pytest==7.2.0 in /home/nginx/miniconda/envs/jupyter/lib/python3.9/site-packages (from mindnlp==0.3.1) (7.2.0)

Requirement already satisfied: attrs>=19.2.0 in /home/nginx/miniconda/envs/jupyter/lib/python3.9/site-packages (from pytest==7.2.0->mindnlp==0.3.1) (23.2.0)

Requirement already satisfied: iniconfig in /home/nginx/miniconda/envs/jupyter/lib/python3.9/site-packages (from pytest==7.2.0->mindnlp==0.3.1) (2.0.0)

Requirement already satisfied: packaging in /home/nginx/miniconda/envs/jupyter/lib/python3.9/site-packages (from pytest==7.2.0->mindnlp==0.3.1) (23.2)

Requirement already satisfied: pluggy<2.0,>=0.12 in /home/nginx/miniconda/envs/jupyter/lib/python3.9/site-packages (from pytest==7.2.0->mindnlp==0.3.1) (1.5.0)

Requirement already satisfied: exceptiongroup>=1.0.0rc8 in /home/nginx/miniconda/envs/jupyter/lib/python3.9/site-packages (from pytest==7.2.0->mindnlp==0.3.1) (1.2.0)

Requirement already satisfied: tomli>=1.0.0 in /home/nginx/miniconda/envs/jupyter/lib/python3.9/site-packages (from pytest==7.2.0->mindnlp==0.3.1) (2.0.1)

Requirement already satisfied: filelock in /home/nginx/miniconda/envs/jupyter/lib/python3.9/site-packages (from datasets->mindnlp==0.3.1) (3.15.3)

Requirement already satisfied: numpy>=1.17 in /home/nginx/miniconda/envs/jupyter/lib/python3.9/site-packages (from datasets->mindnlp==0.3.1) (1.26.4)

Requirement already satisfied: pyarrow>=15.0.0 in /home/nginx/miniconda/envs/jupyter/lib/python3.9/site-packages (from datasets->mindnlp==0.3.1) (16.1.0)

Requirement already satisfied: pyarrow-hotfix in /home/nginx/miniconda/envs/jupyter/lib/python3.9/site-packages (from datasets->mindnlp==0.3.1) (0.6)

Requirement already satisfied: dill<0.3.9,>=0.3.0 in /home/nginx/miniconda/envs/jupyter/lib/python3.9/site-packages (from datasets->mindnlp==0.3.1) (0.3.8)

Requirement already satisfied: pandas in /home/nginx/miniconda/envs/jupyter/lib/python3.9/site-packages (from datasets->mindnlp==0.3.1) (2.2.2)

Requirement already satisfied: xxhash in /home/nginx/miniconda/envs/jupyter/lib/python3.9/site-packages (from datasets->mindnlp==0.3.1) (3.4.1)

Requirement already satisfied: multiprocess in /home/nginx/miniconda/envs/jupyter/lib/python3.9/site-packages (from datasets->mindnlp==0.3.1) (0.70.16)

Requirement already satisfied: fsspec<=2024.5.0,>=2023.1.0 in /home/nginx/miniconda/envs/jupyter/lib/python3.9/site-packages (from fsspec[http]<=2024.5.0,>=2023.1.0->datasets->mindnlp==0.3.1) (2024.5.0)

Requirement already satisfied: aiohttp in /home/nginx/miniconda/envs/jupyter/lib/python3.9/site-packages (from datasets->mindnlp==0.3.1) (3.9.5)

Requirement already satisfied: huggingface-hub>=0.21.2 in /home/nginx/miniconda/envs/jupyter/lib/python3.9/site-packages (from datasets->mindnlp==0.3.1) (0.23.4)

Requirement already satisfied: pyyaml>=5.1 in /home/nginx/miniconda/envs/jupyter/lib/python3.9/site-packages (from datasets->mindnlp==0.3.1) (6.0.1)

Requirement already satisfied: charset-normalizer<4,>=2 in /home/nginx/miniconda/envs/jupyter/lib/python3.9/site-packages (from requests->mindnlp==0.3.1) (3.3.2)

Requirement already satisfied: idna<4,>=2.5 in /home/nginx/miniconda/envs/jupyter/lib/python3.9/site-packages (from requests->mindnlp==0.3.1) (3.7)

Requirement already satisfied: urllib3<3,>=1.21.1 in /home/nginx/miniconda/envs/jupyter/lib/python3.9/site-packages (from requests->mindnlp==0.3.1) (2.2.2)

Requirement already satisfied: certifi>=2017.4.17 in /home/nginx/miniconda/envs/jupyter/lib/python3.9/site-packages (from requests->mindnlp==0.3.1) (2024.6.2)

Requirement already satisfied: protobuf>=3.13.0 in /home/nginx/miniconda/envs/jupyter/lib/python3.9/site-packages (from mindspore->mindnlp==0.3.1) (5.27.1)

Requirement already satisfied: asttokens>=2.0.4 in /home/nginx/miniconda/envs/jupyter/lib/python3.9/site-packages (from mindspore->mindnlp==0.3.1) (2.0.5)

Requirement already satisfied: pillow>=6.2.0 in /home/nginx/miniconda/envs/jupyter/lib/python3.9/site-packages (from mindspore->mindnlp==0.3.1) (10.3.0)

Requirement already satisfied: scipy>=1.5.4 in /home/nginx/miniconda/envs/jupyter/lib/python3.9/site-packages (from mindspore->mindnlp==0.3.1) (1.13.1)

Requirement already satisfied: psutil>=5.6.1 in /home/nginx/miniconda/envs/jupyter/lib/python3.9/site-packages (from mindspore->mindnlp==0.3.1) (5.9.0)

Requirement already satisfied: astunparse>=1.6.3 in /home/nginx/miniconda/envs/jupyter/lib/python3.9/site-packages (from mindspore->mindnlp==0.3.1) (1.6.3)

Requirement already satisfied: pygtrie<3.0,>=2.1 in /home/nginx/miniconda/envs/jupyter/lib/python3.9/site-packages (from pyctcdecode->mindnlp==0.3.1) (2.5.0)

Requirement already satisfied: hypothesis<7,>=6.14 in /home/nginx/miniconda/envs/jupyter/lib/python3.9/site-packages (from pyctcdecode->mindnlp==0.3.1) (6.104.2)

Requirement already satisfied: six in /home/nginx/miniconda/envs/jupyter/lib/python3.9/site-packages (from asttokens>=2.0.4->mindspore->mindnlp==0.3.1) (1.16.0)

Requirement already satisfied: wheel<1.0,>=0.23.0 in /home/nginx/miniconda/envs/jupyter/lib/python3.9/site-packages (from astunparse>=1.6.3->mindspore->mindnlp==0.3.1) (0.43.0)

Requirement already satisfied: aiosignal>=1.1.2 in /home/nginx/miniconda/envs/jupyter/lib/python3.9/site-packages (from aiohttp->datasets->mindnlp==0.3.1) (1.3.1)

Requirement already satisfied: frozenlist>=1.1.1 in /home/nginx/miniconda/envs/jupyter/lib/python3.9/site-packages (from aiohttp->datasets->mindnlp==0.3.1) (1.4.1)

Requirement already satisfied: multidict<7.0,>=4.5 in /home/nginx/miniconda/envs/jupyter/lib/python3.9/site-packages (from aiohttp->datasets->mindnlp==0.3.1) (6.0.5)

Requirement already satisfied: yarl<2.0,>=1.0 in /home/nginx/miniconda/envs/jupyter/lib/python3.9/site-packages (from aiohttp->datasets->mindnlp==0.3.1) (1.9.4)

Requirement already satisfied: async-timeout<5.0,>=4.0 in /home/nginx/miniconda/envs/jupyter/lib/python3.9/site-packages (from aiohttp->datasets->mindnlp==0.3.1) (4.0.3)

Requirement already satisfied: typing-extensions>=3.7.4.3 in /home/nginx/miniconda/envs/jupyter/lib/python3.9/site-packages (from huggingface-hub>=0.21.2->datasets->mindnlp==0.3.1) (4.11.0)

Requirement already satisfied: sortedcontainers<3.0.0,>=2.1.0 in /home/nginx/miniconda/envs/jupyter/lib/python3.9/site-packages (from hypothesis<7,>=6.14->pyctcdecode->mindnlp==0.3.1) (2.4.0)

Requirement already satisfied: python-dateutil>=2.8.2 in /home/nginx/miniconda/envs/jupyter/lib/python3.9/site-packages (from pandas->datasets->mindnlp==0.3.1) (2.9.0.post0)

Requirement already satisfied: pytz>=2020.1 in /home/nginx/miniconda/envs/jupyter/lib/python3.9/site-packages (from pandas->datasets->mindnlp==0.3.1) (2024.1)

Requirement already satisfied: tzdata>=2022.7 in /home/nginx/miniconda/envs/jupyter/lib/python3.9/site-packages (from pandas->datasets->mindnlp==0.3.1) (2024.1)


Looking in indexes: https://pypi.tuna.tsinghua.edu.cn/simple

Requirement already satisfied: jieba in /home/nginx/miniconda/envs/jupyter/lib/python3.9/site-packages (0.42.1)


env: HF_ENDPOINT=https://hf-mirror.com

Import os, mindspore, the dataset and nn modules, the mindnlp `_legacy` engine, and the other modules:

```python
import os
import mindspore
from mindspore.dataset import text, GeneratorDataset, transforms
from mindspore import nn
from mindnlp.dataset import load_dataset
from mindnlp._legacy.engine import Trainer, Evaluator
from mindnlp._legacy.engine.callbacks import CheckpointCallback, BestModelCallback
from mindnlp._legacy.metrics import Accuracy
```

Output:

Building prefix dict from the default dictionary ...

Dumping model to file cache /tmp/jieba.cache

Loading model cost 1.027 seconds.

Prefix dict has been built successfully.

2. Load the training and test datasets

```python
imdb_ds = load_dataset('imdb', split=['train', 'test'])
imdb_train = imdb_ds['train']
imdb_test = imdb_ds['test']
```

Output:

Downloading readme:-- 7.81k/? [00:00<00:00, 478kB/s]

Downloading data: 100%---------------- 21.0M/21.0M [00:09<00:00, 2.43MB/s]

Downloading data: 100%---------------- 20.5M/20.5M [00:10<00:00, 1.95MB/s]

Downloading data: 100%---------------- 42.0M/42.0M [00:16<00:00, 2.69MB/s]

Generating train split: 100%---------------- 25000/25000 [00:00<00:00, 102317.15 examples/s]

Generating test split: 100%---------------- 25000/25000 [00:00<00:00, 130128.57 examples/s]

Generating unsupervised split: 100%---------------- 50000/50000 [00:00<00:00, 140883.29 examples/s]

```python
imdb_train.get_dataset_size()
```

Output:

25000

3. Preprocess the dataset
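The `process_dataset` function in this section pads sequences in two different ways depending on the device. On Ascend, the tokenizer pads every review to `max_seq_len`, which gives static shapes. On CPU/GPU, `padded_batch` pads each batch only up to its longest sequence. The plain-Python sketch below imitates the two branches; it is not MindSpore code, and the helper names `pad_to_length`, `pad_fixed` and `pad_dynamic` are hypothetical. The pad id 40480 is the value that appears in the batch printed later in this post.

```python
from typing import List, Tuple

def pad_to_length(ids: List[int], length: int, pad_id: int) -> Tuple[List[int], List[int]]:
    """Truncate or right-pad one sequence; return (input_ids, attention_mask)."""
    ids = ids[:length]
    n_pad = length - len(ids)
    return ids + [pad_id] * n_pad, [1] * len(ids) + [0] * n_pad

def pad_fixed(batch, max_seq_len, pad_id):
    # Ascend-style: every row padded to max_seq_len, so all batches share one shape.
    return [pad_to_length(ids, max_seq_len, pad_id) for ids in batch]

def pad_dynamic(batch, max_seq_len, pad_id):
    # CPU/GPU-style: truncate to max_seq_len, then pad only to the longest row in this batch.
    longest = min(max(len(ids) for ids in batch), max_seq_len)
    return [pad_to_length(ids, longest, pad_id) for ids in batch]

batch = [[11, 250, 15], [5, 23]]
print(pad_fixed(batch, max_seq_len=5, pad_id=40480))
print(pad_dynamic(batch, max_seq_len=5, pad_id=40480))
```

Dynamic padding wastes less computation on short batches. Fixed padding trades that saving for one compiled graph shape, which is the usual reason to choose it on Ascend.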

```python
import numpy as np

def process_dataset(dataset, tokenizer, max_seq_len=512, batch_size=4, shuffle=False):
    # Ascend needs static shapes, so samples are padded to max_seq_len there
    is_ascend = mindspore.get_context('device_target') == 'Ascend'

    def tokenize(text):
        if is_ascend:
            tokenized = tokenizer(text, padding='max_length', truncation=True, max_length=max_seq_len)
        else:
            tokenized = tokenizer(text, truncation=True, max_length=max_seq_len)
        return tokenized['input_ids'], tokenized['attention_mask']

    if shuffle:
        # buffer_size=batch_size only shuffles within a small local window
        dataset = dataset.shuffle(batch_size)

    # map dataset: tokenize the text column and cast labels to int32
    dataset = dataset.map(operations=[tokenize], input_columns="text",
                          output_columns=['input_ids', 'attention_mask'])
    dataset = dataset.map(operations=transforms.TypeCast(mindspore.int32),
                          input_columns="label", output_columns="labels")

    # batch dataset
    if is_ascend:
        dataset = dataset.batch(batch_size)
    else:
        # pad each batch only up to its longest sequence
        dataset = dataset.padded_batch(batch_size,
                                       pad_info={'input_ids': (None, tokenizer.pad_token_id),
                                                 'attention_mask': (None, 0)})
    return dataset
```

```python
from mindnlp.transformers import GPTTokenizer

# tokenizer
gpt_tokenizer = GPTTokenizer.from_pretrained('openai-gpt')

# add special tokens <bos>, <eos>, <pad>: openai-gpt has no pad token by default, and padding needs one
special_tokens_dict = {
    "bos_token": "<bos>",
    "eos_token": "<eos>",
    "pad_token": "<pad>",
}
num_added_toks = gpt_tokenizer.add_special_tokens(special_tokens_dict)
```

Output:

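The connection failed (the log below shows repeated read timeouts against hf-mirror.com). I am not sure whether this is because OpenAI has shut down the service.

[From this point on, execution could not continue.]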

100%---------------- 25.0/25.0 [00:00<00:00, 2.39kB/s]

---------------- 533k/0.00 [00:35<00:00, 49.3kB/s]

Failed to download: HTTPSConnectionPool(host='hf-mirror.com', port=443): Read timed out.

Retrying... (attempt 0/5)

---------------- 263k/0.00 [00:08<00:00, 57.6kB/s]

---------------- 378k/0.00 [00:41<00:00, 5.35kB/s]

Failed to download: HTTPSConnectionPool(host='hf-mirror.com', port=443): Read timed out.

Retrying... (attempt 0/5)

---------------- 69.6k/0.00 [00:01<00:00, 35.7kB/s]

---------------- 684k/0.00 [00:45<00:00, 8.49kB/s]

Failed to download: HTTPSConnectionPool(host='hf-mirror.com', port=443): Read timed out.

Retrying... (attempt 0/5)

---------------- 559k/0.00 [00:36<00:00, 27.3kB/s]

---------------- 656/? [00:00<00:00, 62.5kB/s]
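The three special tokens registered above receive ids after the existing vocabulary, in the order they are added. This explains two things later in the post: the pad id 40480 in the printed batch, and the model cell's resizing of the embedding table by 3 rows. The sketch below assumes openai-gpt's base vocabulary has 40,478 entries, which is consistent with `<pad>` showing up as 40480. `append_special_tokens` is a hypothetical helper, not the tokenizer's API.

```python
def append_special_tokens(vocab_size, new_tokens):
    """Hypothetical helper: give each new token the next unused id, in order."""
    return {tok: vocab_size + i for i, tok in enumerate(new_tokens)}

BASE_VOCAB = 40478  # assumed size of the openai-gpt vocabulary
ids = append_special_tokens(BASE_VOCAB, ["<bos>", "<eos>", "<pad>"])
print(ids)                    # {'<bos>': 40478, '<eos>': 40479, '<pad>': 40480}
print(BASE_VOCAB + len(ids))  # 40481 rows needed in the embedding matrix
```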

```python
# split train dataset into train and valid datasets
imdb_train, imdb_val = imdb_train.split([0.7, 0.3])

dataset_train = process_dataset(imdb_train, gpt_tokenizer, shuffle=True)
dataset_val = process_dataset(imdb_val, gpt_tokenizer)
dataset_test = process_dataset(imdb_test, gpt_tokenizer)
```

```python
next(dataset_train.create_tuple_iterator())
```

Output:

[Tensor(shape=[4, 512], dtype=Int64, value=

[[ 11, 250, 15 ... 3, 242, 3],

[ 5, 23, 5 ... 40480, 40480, 40480],

[ 14, 3, 5 ... 243, 8, 18073],

[ 7, 250, 3 ... 40480, 40480, 40480]]),

Tensor(shape=[4, 512], dtype=Int64, value=

[[1, 1, 1 ... 1, 1, 1],

[1, 1, 1 ... 0, 0, 0],

[1, 1, 1 ... 1, 1, 1],

[1, 1, 1 ... 0, 0, 0]]),

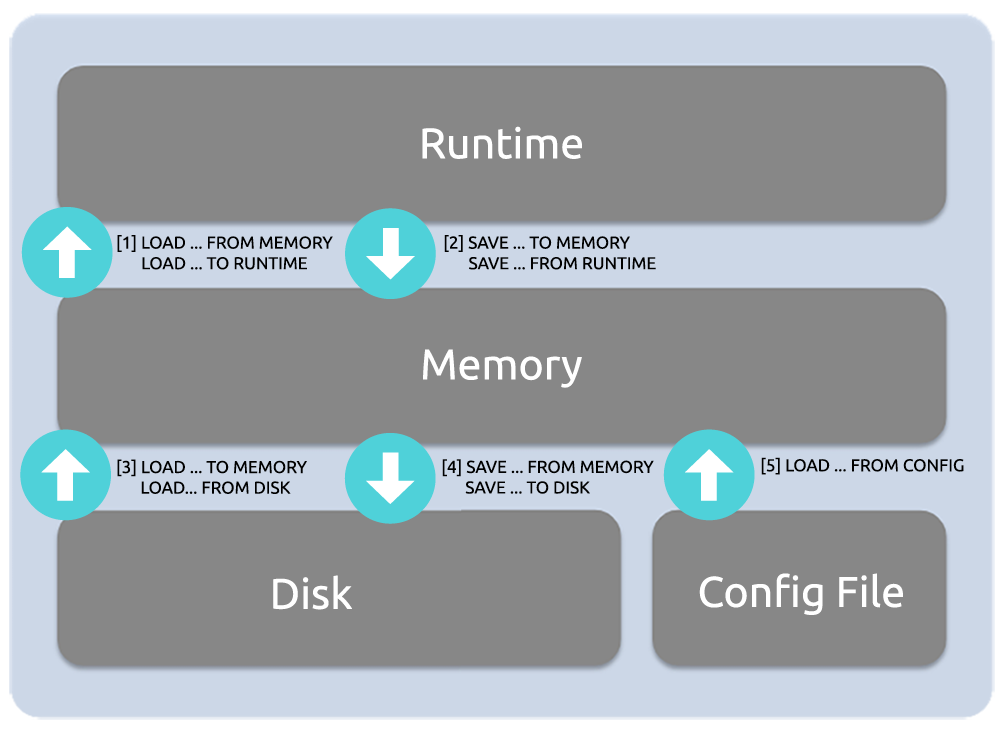

Tensor(shape=[4], dtype=Int32, value= [0, 1, 0, 1])]from mindnlp.transformers import GPTForSequenceClassification

from mindspore.experimental.optim import Adam

# set bert config and define parameters for training

model = GPTForSequenceClassification.from_pretrained('openai-gpt', num_labels=2)

model.config.pad_token_id = gpt_tokenizer.pad_token_id

model.resize_token_embeddings(model.config.vocab_size + 3)

optimizer = nn.Adam(model.trainable_params(), learning_rate=2e-5)

metric = Accuracy()

# define callbacks to save checkpoints

ckpoint_cb = CheckpointCallback(save_path='checkpoint', ckpt_name='gpt_imdb_finetune', epochs=1, keep_checkpoint_max=2)

best_model_cb = BestModelCallback(save_path='checkpoint', ckpt_name='gpt_imdb_finetune_best', auto_load=True)

trainer = Trainer(network=model, train_dataset=dataset_train,

eval_dataset=dataset_train, metrics=metric,

epochs=1, optimizer=optimizer, callbacks=[ckpoint_cb, best_model_cb],

jit=False)输出:

100%---------------- 457M/457M [04:06<00:00, 2.87MB/s]

100%---------------- 74.0/74.0 [00:00<00:00, 4.28kB/s]

4. Training
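GPT has no `[CLS]` token. Hugging Face's `OpenAIGPTForSequenceClassification` therefore classifies from the hidden state of the last non-padding token in each row, and it finds that position using `pad_token_id`. This sketch assumes mindnlp's `GPTForSequenceClassification` follows the same scheme, which would explain why the model cell copies `pad_token_id` from the tokenizer. The pure-Python function below only computes the index; `last_token_index` is a hypothetical name, and the rows are assumed to be right-padded as in this notebook.

```python
from typing import List, Optional

def last_token_index(input_ids: List[List[int]], pad_token_id: Optional[int]) -> List[int]:
    """Index of the last non-padding token in each right-padded row."""
    indices = []
    for row in input_ids:
        n_real = sum(1 for tok in row if tok != pad_token_id)
        indices.append(n_real - 1)
    return indices

PAD = 40480
batch = [
    [11, 250, 15, 3],   # no padding: classify from position 3
    [5, 23, PAD, PAD],  # padded: classify from position 1
]
print(last_token_index(batch, PAD))  # [3, 1]
```

If `pad_token_id` were left as `None`, every token would count as real, and padded rows would be classified from a `<pad>` position instead of the end of the review.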

```python
trainer.run(tgt_columns="labels")
```

5. Evaluation

```python
evaluator = Evaluator(network=model, eval_dataset=dataset_test, metrics=metric)
evaluator.run(tgt_columns="labels")
```
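The `Accuracy` metric used by the trainer and evaluator is assumed here to follow the usual definition: the fraction of reviews whose highest-scoring class (argmax over the two logits) equals the label. The plain-Python sketch below shows that computation. The logits and labels are made-up numbers, not results from this notebook, and `accuracy` is a hypothetical helper rather than the mindnlp class.

```python
from typing import Sequence

def accuracy(logits: Sequence[Sequence[float]], labels: Sequence[int]) -> float:
    """Fraction of rows whose highest-scoring class equals the label."""
    correct = 0
    for row, label in zip(logits, labels):
        pred = max(range(len(row)), key=lambda i: row[i])  # argmax over the classes
        correct += int(pred == label)
    return correct / len(labels)

logits = [[2.0, -1.0], [0.1, 0.9], [0.3, 0.2], [-0.5, 0.5]]
labels = [0, 1, 1, 1]
print(accuracy(logits, labels))  # 0.75
```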